StableDrag-GAN

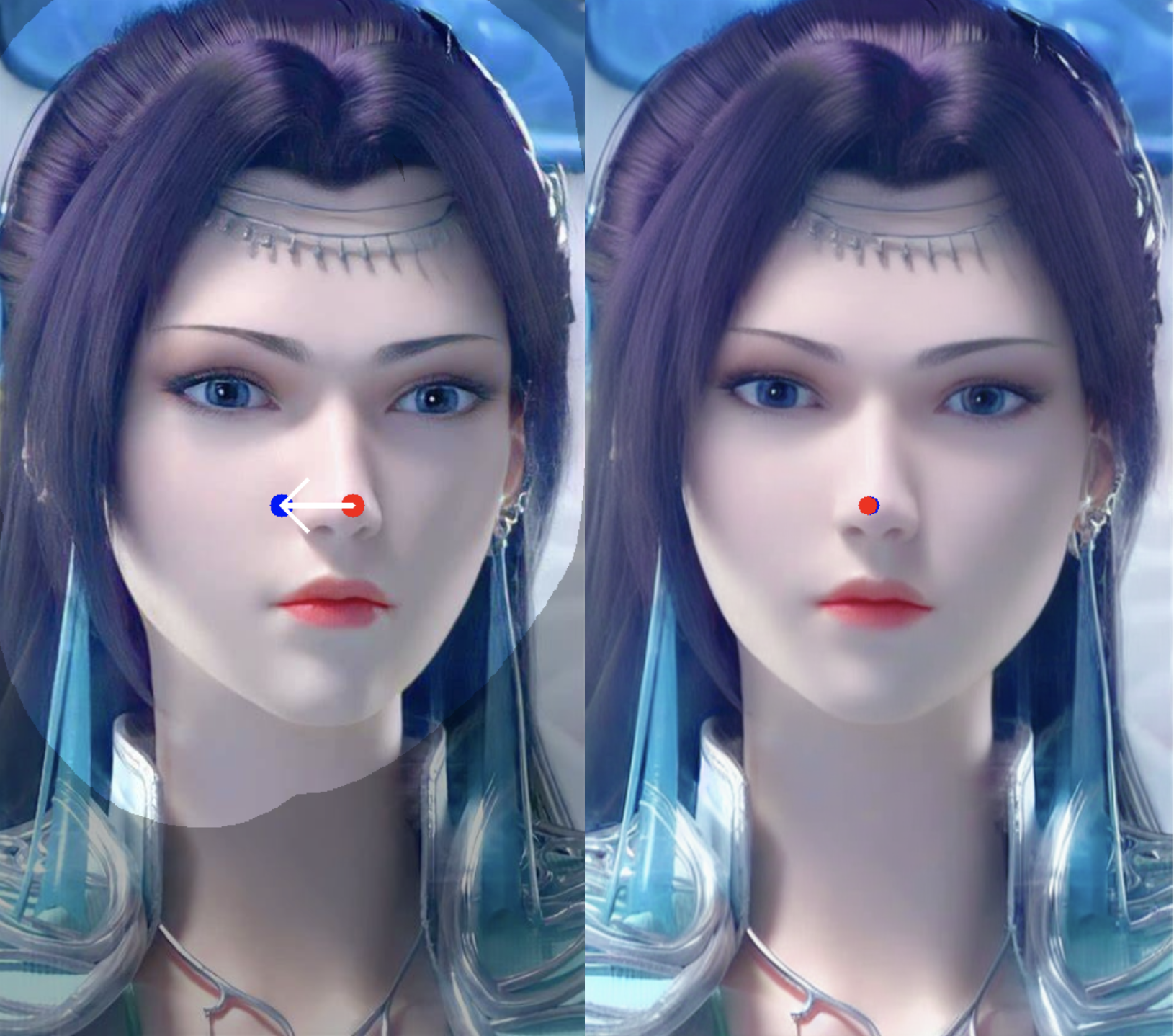

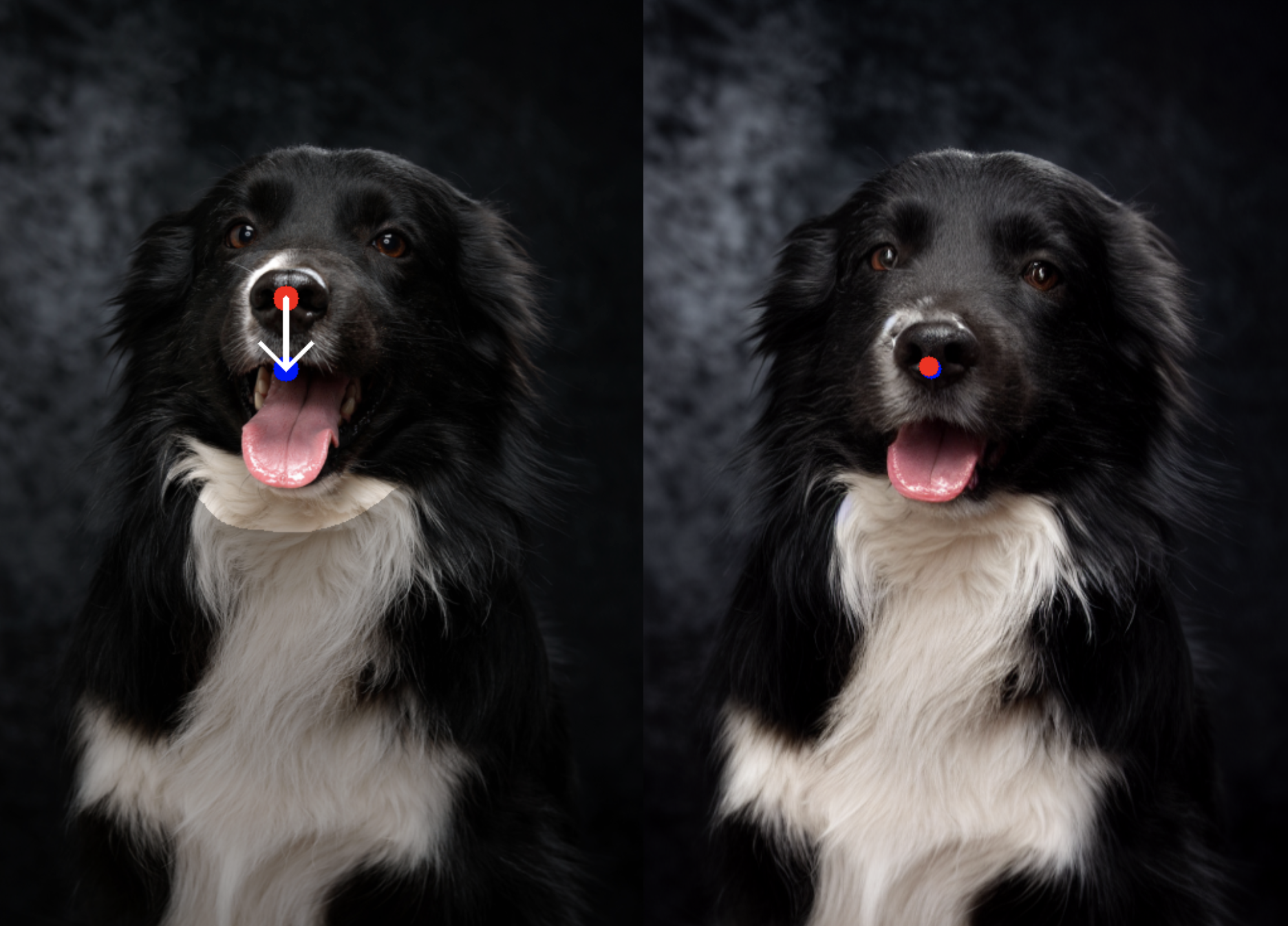

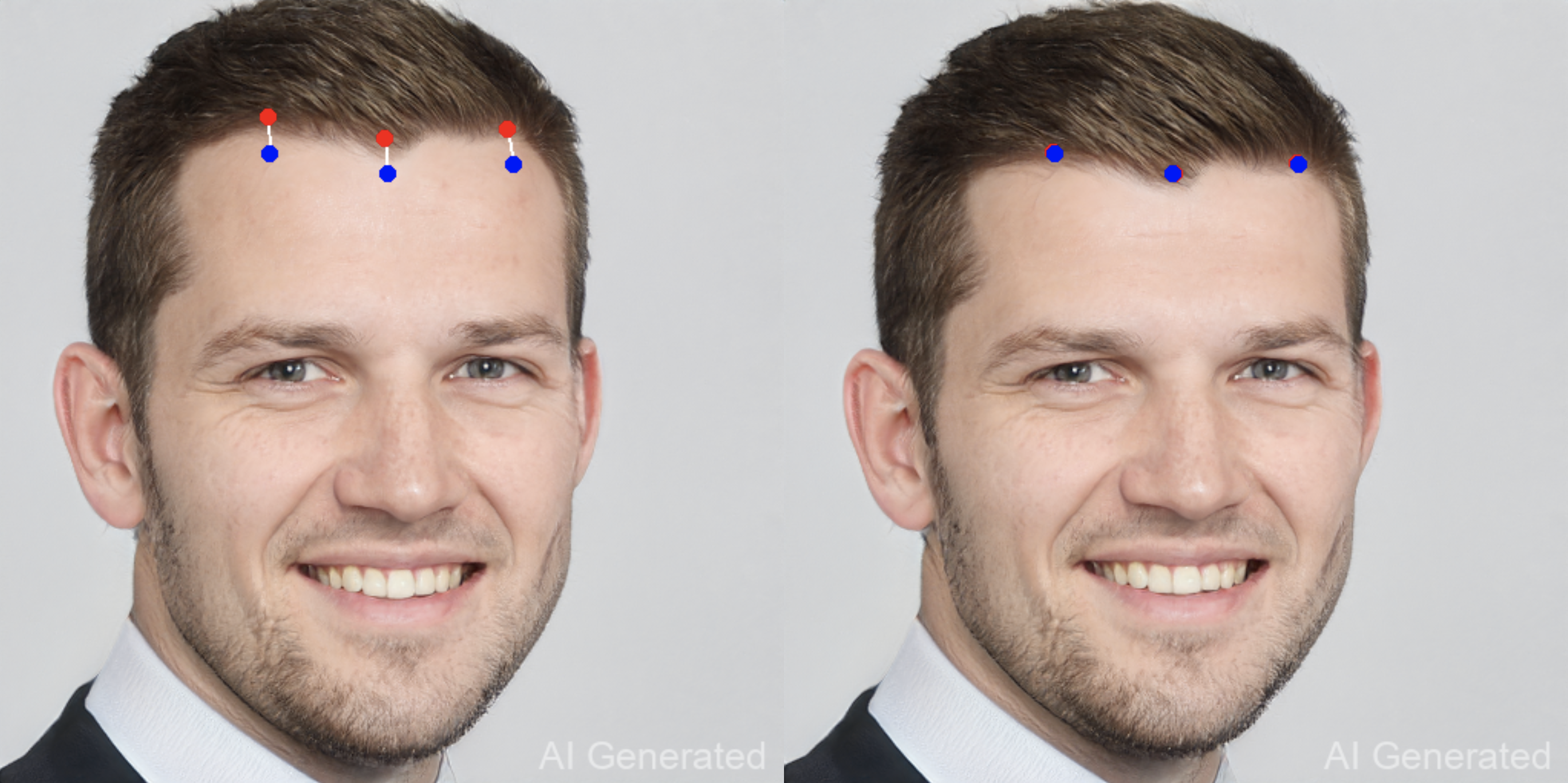

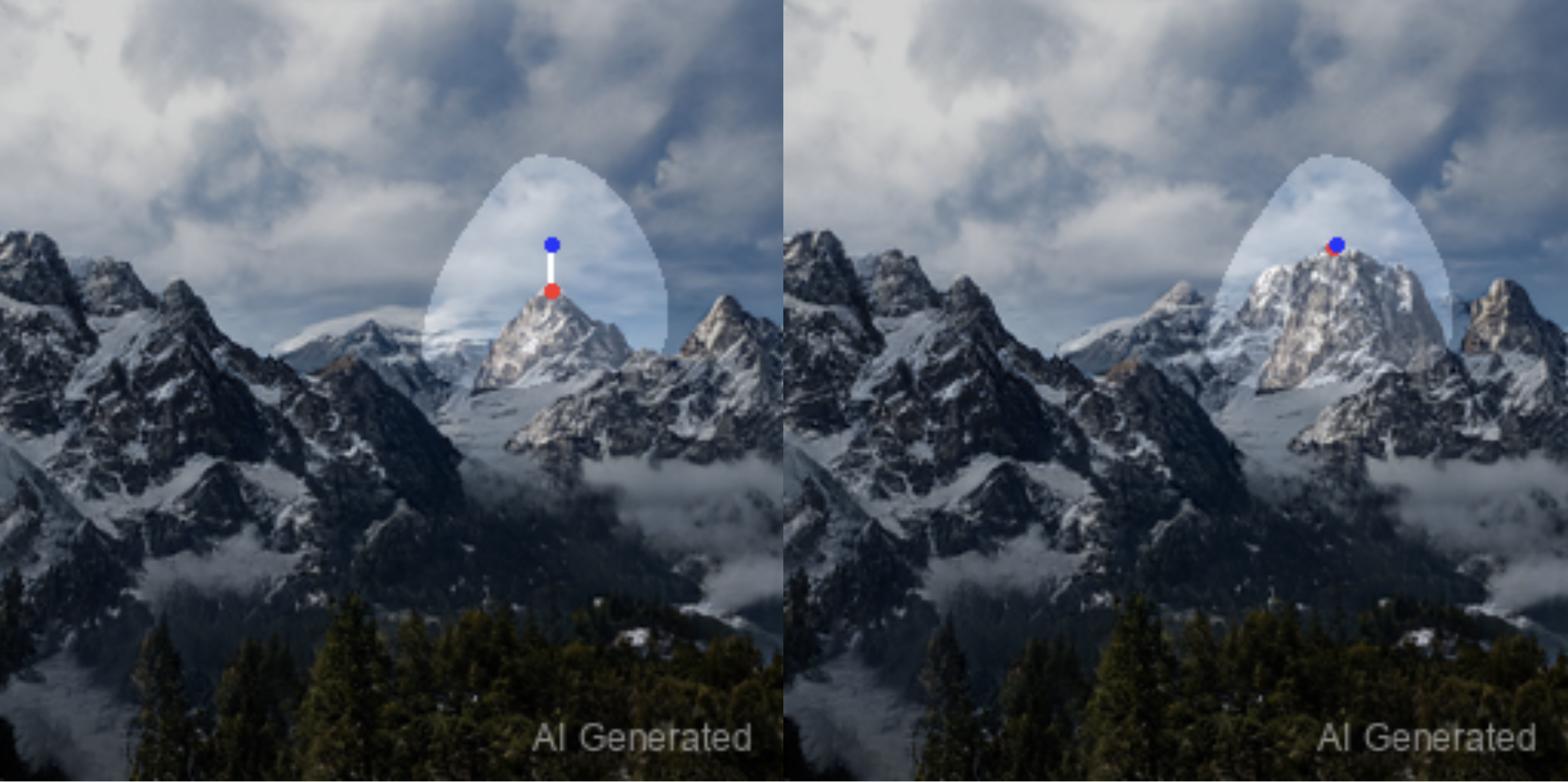

StableDrag-Diff

Abstract

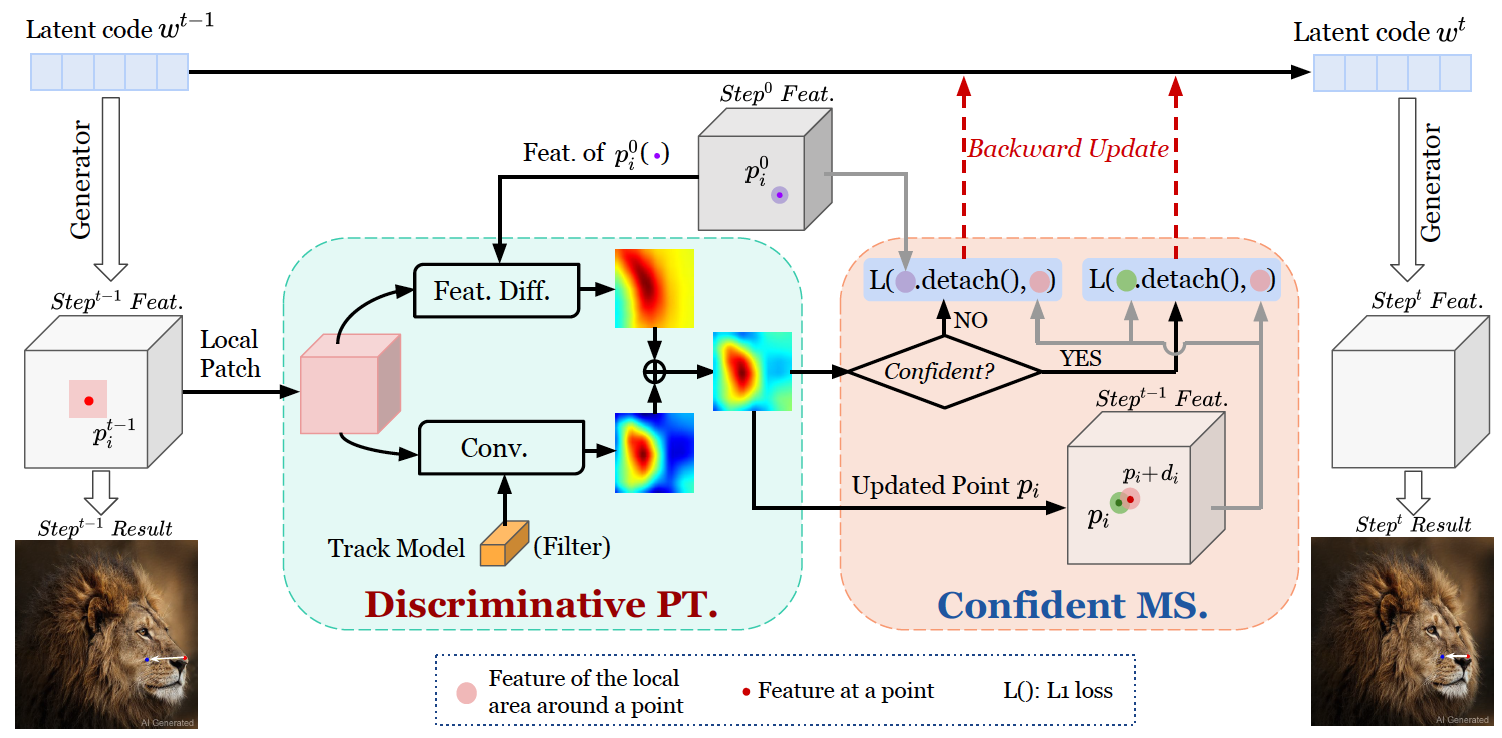

Point-based image editing has attracted remarkable attention since the emergence of DragGAN. Recently, DragDiffusion further pushed generative quality forward by adapting this dragging technique to diffusion models. Despite this success, the dragging scheme exhibits two major drawbacks, namely inaccurate point tracking and incomplete motion supervision, which may result in unsatisfactory dragging outcomes. To tackle these issues, we build a stable and precise drag-based editing framework, coined StableDrag, by designing a discriminative point tracking method and a confidence-based latent enhancement strategy for motion supervision. The former allows us to precisely locate the updated handle points, thereby boosting the stability of long-range manipulation, while the latter is responsible for keeping the optimized latent as high-quality as possible across all manipulation steps. Thanks to these designs, we instantiate two types of image editing models, StableDrag-GAN and StableDrag-Diff, which attain more stable dragging performance, as shown by extensive qualitative experiments and quantitative assessment on DragBench.

More results of our StableDrag

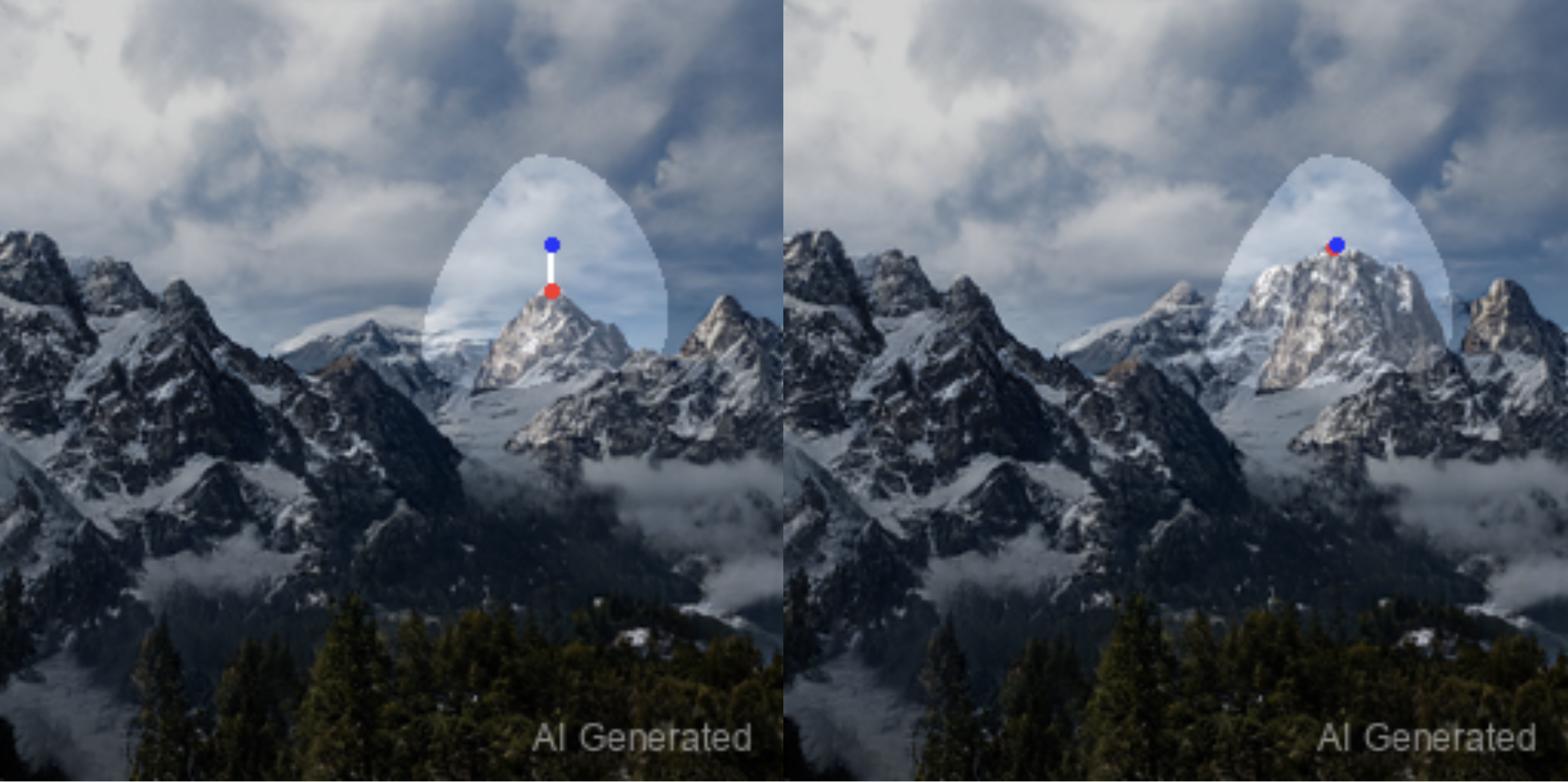

StableDrag-Diff samples

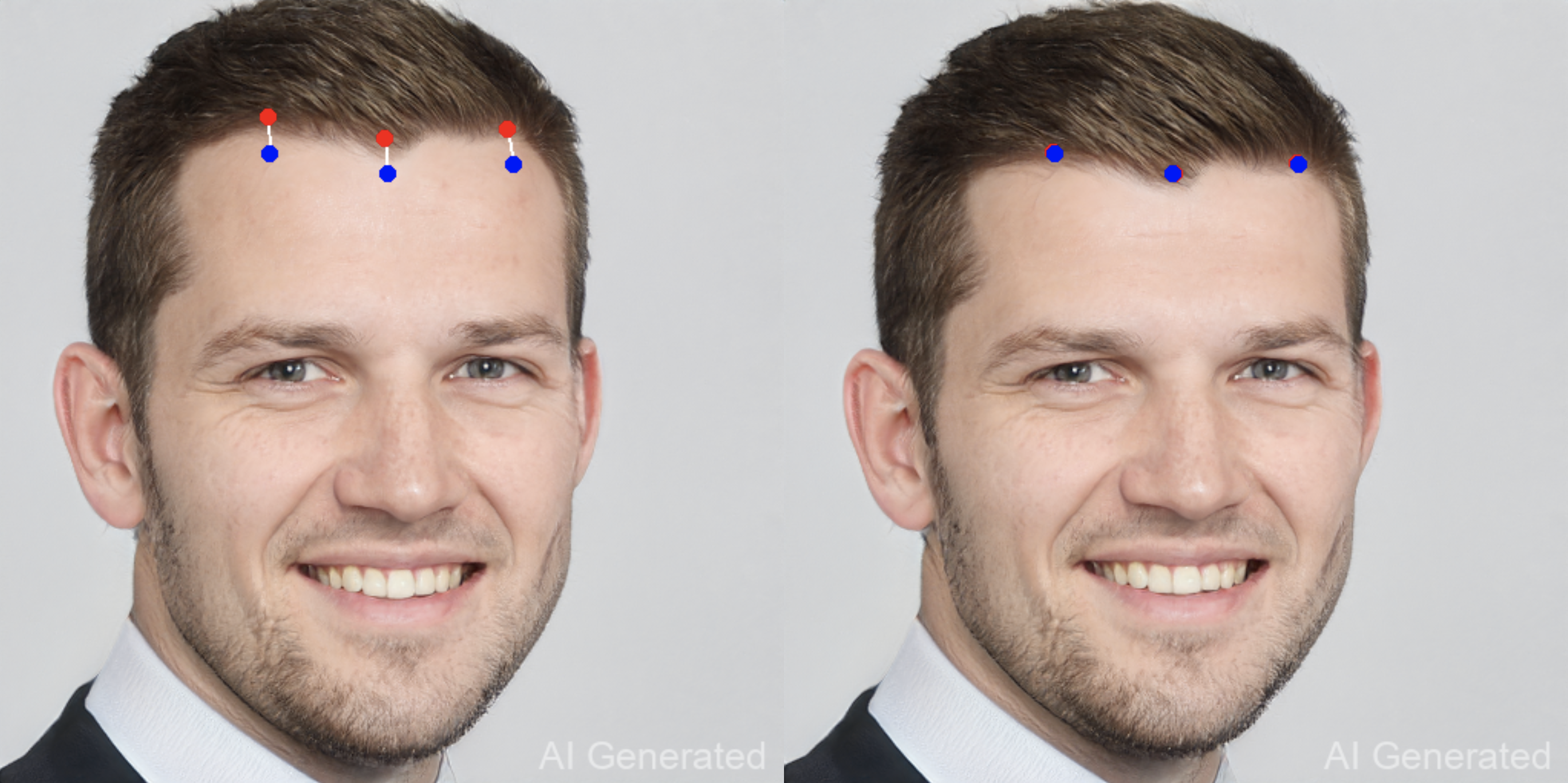

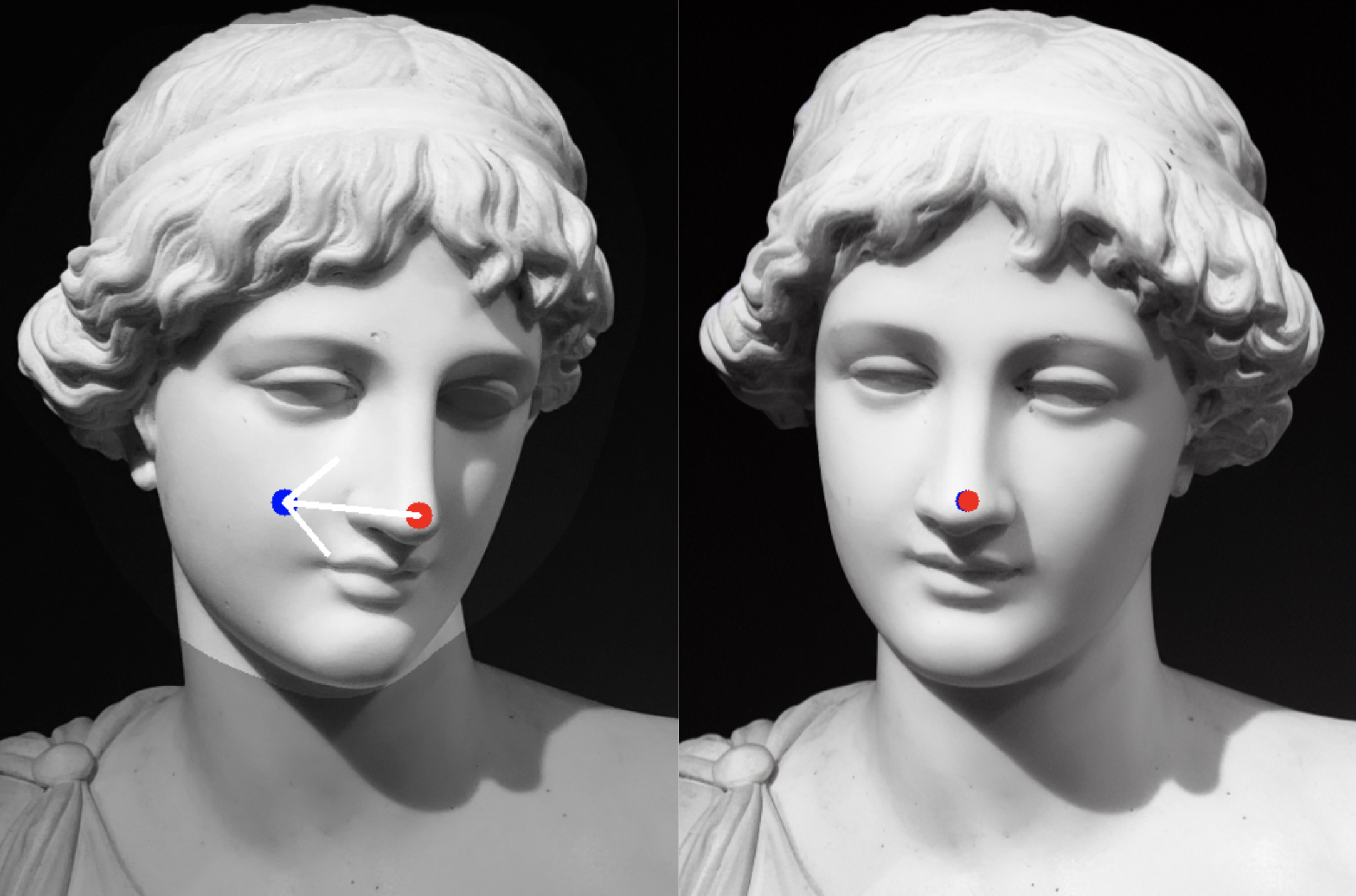

StableDrag-GAN samples
